Welcome to your new hub for all things land investment: the Land Academy Discord community! We have evolved from the LandInvestors.com forum to provide a more interactive, real-time platform for our members. Here, you’ll be able to connect with fellow land investors, participate in dynamic discussions, and tap into a wealth of knowledge from our experienced community. Whether you’re seeking advice, sharing your success, or simply staying updated on the latest in the land investment world, our Discord community is your go-to resource. Step into the future of networking and collaboration with the Land Academy Discord. Together, let’s continue our journey toward greater success in land investment.

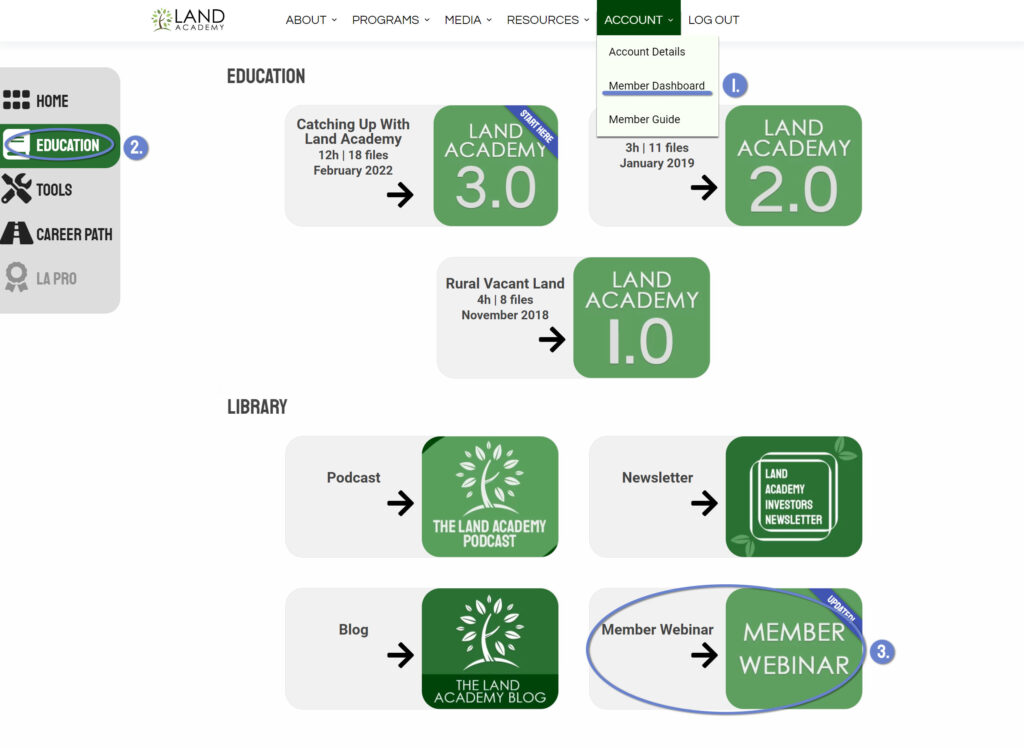

Land Academy PRO is the brainchild of founders Steven Jack Butala and Jill DeWit. It was designed at the request of Land Academy members who are ready for a higher level, and we’re excited to continue providing the tools and support that professional investors need.

Each level comes with a preset amount of included data, Concierge Mail service, and postage. For example, the Green level includes 6,000 units of completed-for-you mail, sent out the door at no extra cost to you.

All levels include a PatLive introduction and preset script (we will set up your phone answering for you), use of Land Academy’s personal Transaction Team to manage your deal flow, an AirTable (CRM) base setup managed by our (and your!) Transaction Coordinator, personal consulting, regular office hours, and the cost of your Land Academy subscription.

If you’re making this a business, Land Academy PRO takes the work off your plate so you can focus on the things that matter, like running your business.

| Feature | Green ($10,060 per month) | Silver ($14,590 per month) | Gold ($19,120 per month) | Platinum ($23,650 per month) | Black ($28,180 per month) |
|---|---|---|---|---|---|
| Concierge Data+ (with data): included mailers each month (data + concierge + mailer + postage). Our team will prepare your data and get the mail out the door. | 6,000 mailers | 9,000 mailers | 12,000 mailers | 15,000 mailers | 18,000 mailers |
| PatLive introduction at no cost: we will help you establish your first script and get PatLive set up on your behalf to answer your phones. | $500 value | $500 value | $500 value | $500 value | $500 value |
| Transaction Coordinator: use of our personal Transaction Coordinator team to manage your deals. Trained and ready to go! | $7,500 value | $7,500 value | $7,500 value | $7,500 value | $7,500 value |
| AirTable: ready-for-you CRM managed by your personal Land Academy Pro Transaction Coordinator. | $100 value | $100 value | $100 value | $100 value | $100 value |
| Personal Consulting: one-on-one personal consulting with our Transaction Coordinator each week. | - | - | $1,000 value | $1,000 value | $1,000 value |
| Regular Office Hours: regular office hours with Jack and Jill + our staff. Private for LA Pro Members Only. (Think Career Path Office Hours) | $2,500 value | $2,500 value | $2,500 value | $2,500 value | $2,500 value |
| ParcelFact: included in your LA Pro membership with unlimited pulls. | $150 value | $150 value | $150 value | $150 value | $150 value |
| FREE Career Path Access | $23,000 value | $23,000 value | $23,000 value | $23,000 value | $23,000 value |
| Land Academy: no more separate charges. Land Academy is included with LA Pro Membership. This includes all education, tools, support, and future releases. | $300 value | $300 value | $300 value | $300 value | $300 value |
| Subtotal: | $8,550 value | $8,550 value | $9,550 value | $12,050 value | $12,050 value |
| Mail Value: | $7,500 value | $11,250 value | $15,000 value | $18,750 value | $22,500 value |
| Total Value: | $39,050 | $42,800 | $47,550 | $53,800 | $57,550 |

Disclaimer: *We have a monthly “use it or lose it” policy with mail and data – Land Academy PRO is designed to keep you on track and consistent.

To cancel, all packages require 30 days’ notice to move you back down to a regular Land Academy membership.

Scheduling a Career Path interview call is currently on hold and will resume closer to Fall 2024 as we approach Career Path 10.

Copyright © 2024 All Rights Reserved.
